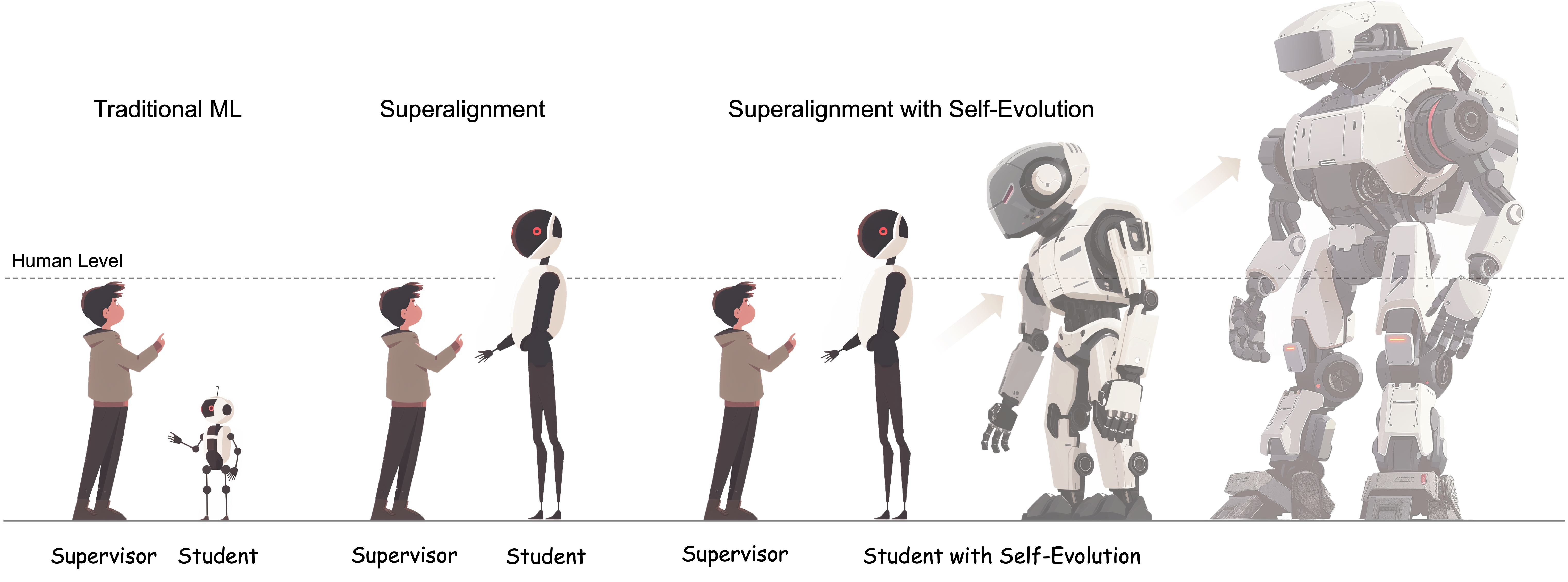

From Alignment to Super Alignment

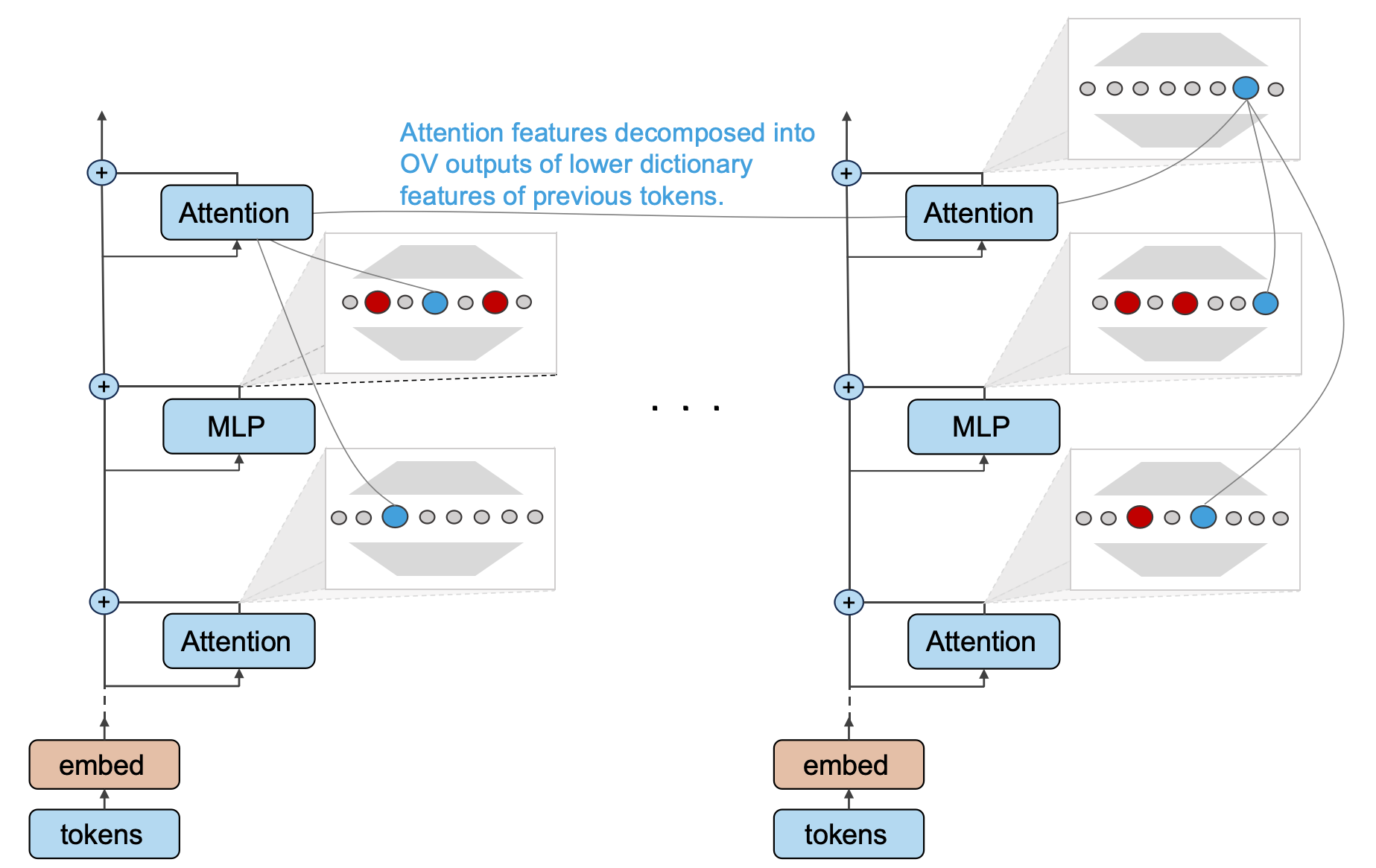

Alignment is an important phase in the training of large language models (LLMs). The OpenMOSS team researches alignment methods for building helpful, harmless, and truthful AI assistants. Through weak-to-strong alignment, Self-Evolution, and other approaches, OpenMOSS aims to progress toward super alignment.